Hierarchical Clustering: Revealing Data's Inner Architecture Through Nested Patterns

Introduction to Hierarchical Clustering

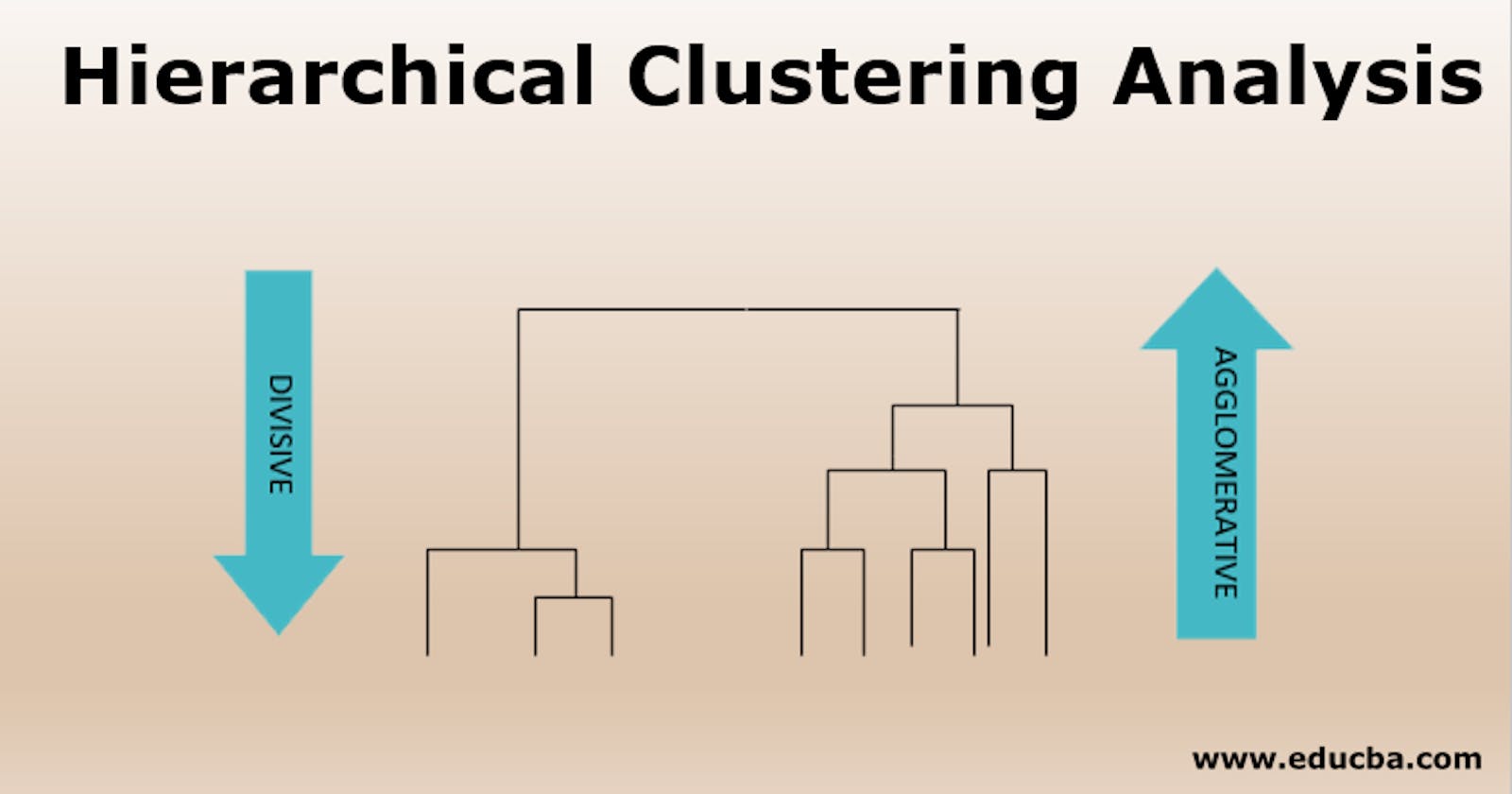

Hierarchical clustering is an unsupervised learning technique that arranges data points into a tree-like structure of clusters. Unlike K-Means clustering, which assigns data points to a fixed number of clusters, hierarchical clustering offers a more flexible approach. It provides a visual representation of how data points are related to one another, revealing clusters at different levels of granularity.

The Hierarchical Clustering Process

The hierarchical clustering process involves the following steps:

Data Preparation: Gather and preprocess the data, ensuring it is suitable for clustering analysis.

Distance Computation: Calculate the distance or dissimilarity between data points using a chosen distance metric (e.g., Euclidean distance or correlation distance).

Cluster Initialization: Start with each data point as an individual cluster or group.

Merge Clusters: Iteratively merge the two closest clusters into a single cluster until all data points belong to a single cluster.

Types of Hierarchical Clustering

There are two main approaches to hierarchical clustering:

Agglomerative Hierarchical Clustering: This "bottom-up" approach starts with individual data points and iteratively merges them into larger clusters. It is the most common approach.

Divisive Hierarchical Clustering: This "top-down" approach starts with all data points in a single cluster and then recursively divides the clusters into smaller ones.

Creating Dendrograms

A dendrogram is a tree-like diagram that illustrates the hierarchy of clusters formed during hierarchical clustering. It provides insights into the relationships between data points and the structure of the resulting clusters. The vertical axis of a dendrogram represents the distance between clusters, while the horizontal axis represents individual data points or clusters.

Determining the Number of Clusters

Hierarchical clustering allows for an intuitive visualization of the data's structure at various levels. The optimal number of clusters can often be identified by observing the dendrogram and looking for a point where clusters start to merge less clearly.

Applications of Hierarchical Clustering

Hierarchical clustering finds its utility in diverse fields:

Biology: It helps classify species based on genetic similarities and create phylogenetic trees.

Image Segmentation: Hierarchical clustering can group pixels in an image to identify objects or regions.

Market Segmentation: Businesses use it to segment customers based on purchasing behaviors for targeted marketing strategies.

Document Clustering: It aids in grouping similar documents for tasks like topic modeling.

Neuroscience: Hierarchical clustering is used to analyze brain activity patterns and neuronal connections.

Strengths and Limitations

Hierarchical clustering's strengths include its ability to capture intricate data relationships and provide a detailed visual representation. However, it may struggle with very large datasets due to its computational complexity. Additionally, the choice of distance metric and linkage criteria can impact the results.

Conclusion

Hierarchical clustering stands as a dynamic technique that delves deep into the essence of data relationships. By revealing nested patterns and offering a visual narrative of these relationships, it empowers data analysts and scientists to navigate intricate datasets with finesse. As technology advances and data complexity grows, hierarchical clustering remains an invaluable tool for illuminating the inner workings of diverse datasets, shining a light on the hidden connections that drive the world of data-driven insights.