Introduction

In the realm of artificial intelligence and reinforcement learning, the Q-learning algorithm stands as one of the foundational pillars. Developed by Christopher Watkins in his PhD thesis in 1989, Q-learning has since become a cornerstone of modern machine learning and has been employed in various applications, from robotics and gaming to optimization and decision-making processes. This article aims to provide a comprehensive overview of the Q-learning algorithm, its underlying principles, and its significance in the field of artificial intelligence.

Reinforcement Learning: A Brief Overview

Before delving into Q-learning, let's establish the context by understanding the broader concept of reinforcement learning (RL). Reinforcement learning is a subfield of machine learning where an agent learns to make sequential decisions by interacting with an environment. The agent takes actions to maximize a cumulative reward over time. The key components of reinforcement learning include:

Agent: The learner or decision-maker that interacts with the environment.

Environment: The external system with which the agent interacts.

State: A representation of the current situation of the agent within the environment.

Action: The decision or choice made by the agent to transition from one state to another.

Reward: A numerical signal received by the agent that indicates the immediate benefit or desirability of an action.

Q-Learning: The Basics

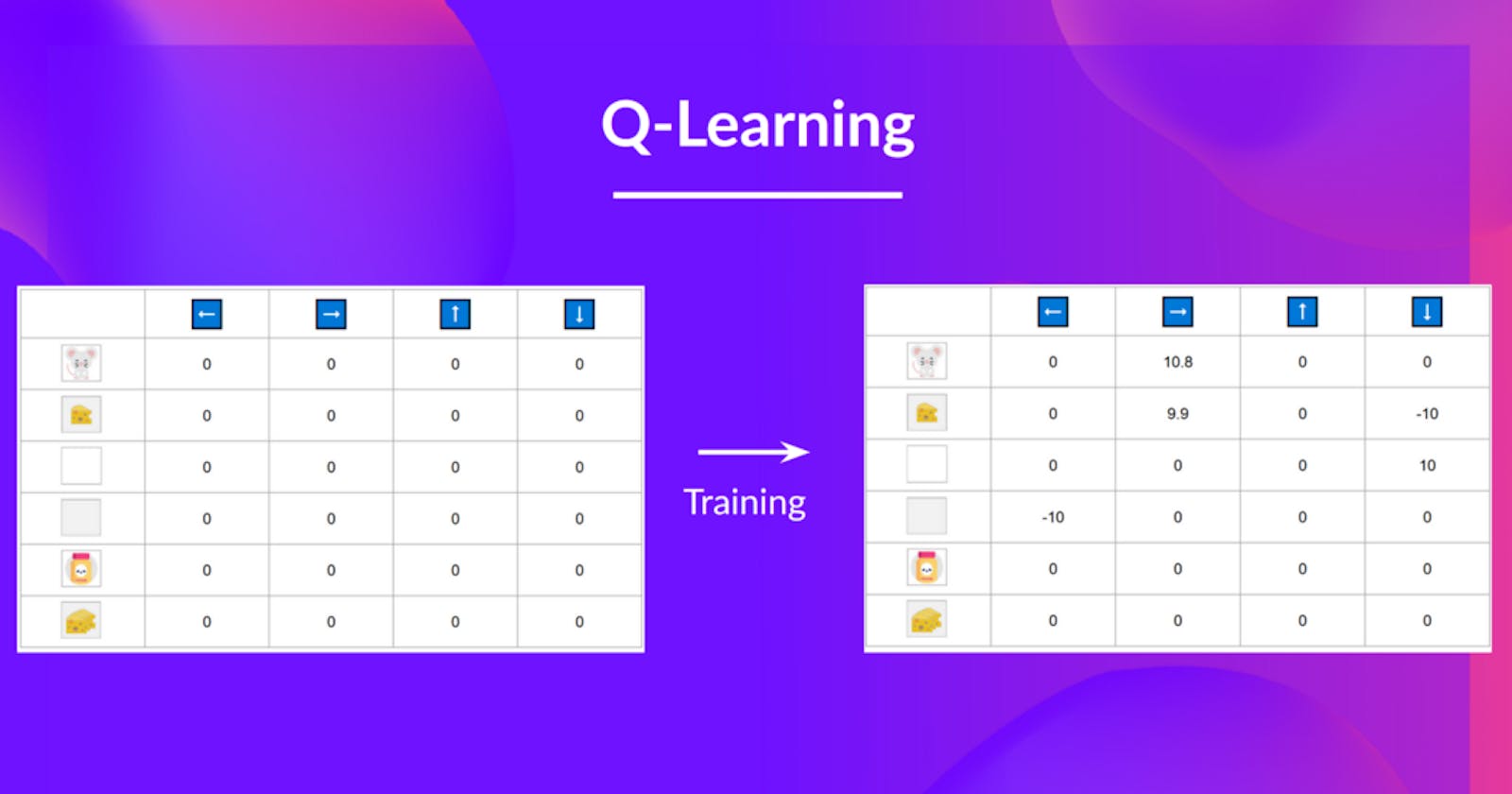

Q-learning is a model-free reinforcement learning algorithm that aims to find an optimal policy for an agent to take actions in an environment in order to maximize cumulative rewards. The core idea behind Q-learning is to learn a Q-function (also known as action-value function) that estimates the expected cumulative reward of taking a specific action in a given state and following the optimal policy thereafter.

Mathematically, the Q-function is defined as follows:

Q(s, a) = Q(s, a) + α * [r + γ * max(Q(s', a')) - Q(s, a)]

Where:

Q(s, a)represents the Q-value of taking actionain states.αis the learning rate, controlling the weight given to the new information.ris the immediate reward received after taking actionain states.γis the discount factor, which trades off immediate and future rewards.max(Q(s', a'))is the maximum Q-value among possible actions in the next states'.

The algorithm iteratively updates the Q-values based on the observed rewards and transitions. Over time, the Q-values converge to the optimal values, guiding the agent towards making informed decisions.

Exploration vs. Exploitation

An essential aspect of Q-learning is the balance between exploration and exploitation. Exploration involves trying new actions to discover potentially better strategies, while exploitation involves choosing actions that are known to yield high rewards based on current knowledge.

To balance these two conflicting objectives, many Q-learning implementations use an epsilon-greedy policy. This means that the agent selects the action with the highest Q-value most of the time (exploitation), but occasionally takes a random action (exploration) with a probability of ε. This ensures that the agent continues to explore new possibilities while gradually exploiting the learned information.

Applications and Impact

The Q-learning algorithm has found numerous applications across various domains:

Game Playing: Q-learning has been employed in developing AI agents that excel in playing games like chess, Go, and Atari games. Notable examples include DeepMind's AlphaGo and OpenAI's DQN (Deep Q-Network).

Robotics: Q-learning is used in training robots to learn tasks and navigate complex environments autonomously, enabling applications in industrial automation, healthcare, and more.

Optimization: Q-learning can be applied to solve optimization problems, such as resource allocation, scheduling, and route planning.

Finance: Q-learning can aid in portfolio optimization, risk management, and trading strategies in financial markets.

Control Systems: Q-learning has been applied to control systems in engineering, optimizing parameters for processes and systems.

Conclusion

In the ever-evolving landscape of artificial intelligence and reinforcement learning, the Q-learning algorithm has emerged as a powerful tool for training agents to make intelligent decisions in dynamic environments. Its ability to balance exploration and exploitation, along with its diverse applications, has solidified its role as a fundamental technique in the field. As technology advances and new challenges arise, Q-learning continues to pave the way for innovative solutions and insights, shaping the future of intelligent decision-making systems.